1.2 📜 A Brief History of Language Models: From N-Grams to Transformers

💡 Before GPT-4, there was a time when AI couldn't even form a proper sentence. Ever wondered how we went from basic text prediction to AI models that can write essays, generate code and create poems?

In this post, Obito & Rin explore:

✅ How early models like N-Grams tried to predict text

✅ Why RNNs & LSTMs were a step forward (but had major flaws)

✅ How Transformers changed everything

Let’s go back in time.

👩‍💻 Rin: "Obito, LLMs like GPT-4 seem crazy smart. But how did we get here?"

👨‍💻 Obito: "It wasn’t always this way. The early days of AI language models were… rough."

👩‍💻 Rin: "How rough? Like bad autocomplete rough?"

👨‍💻 Obito: "Worse. Picture predicting words with barely any context. Let’s start from the beginning."

🔢 1940s–2000s: The Age of N-Grams & Markov Models

👨‍💻 Obito: "The earliest language models were based on statistics, not deep learning."

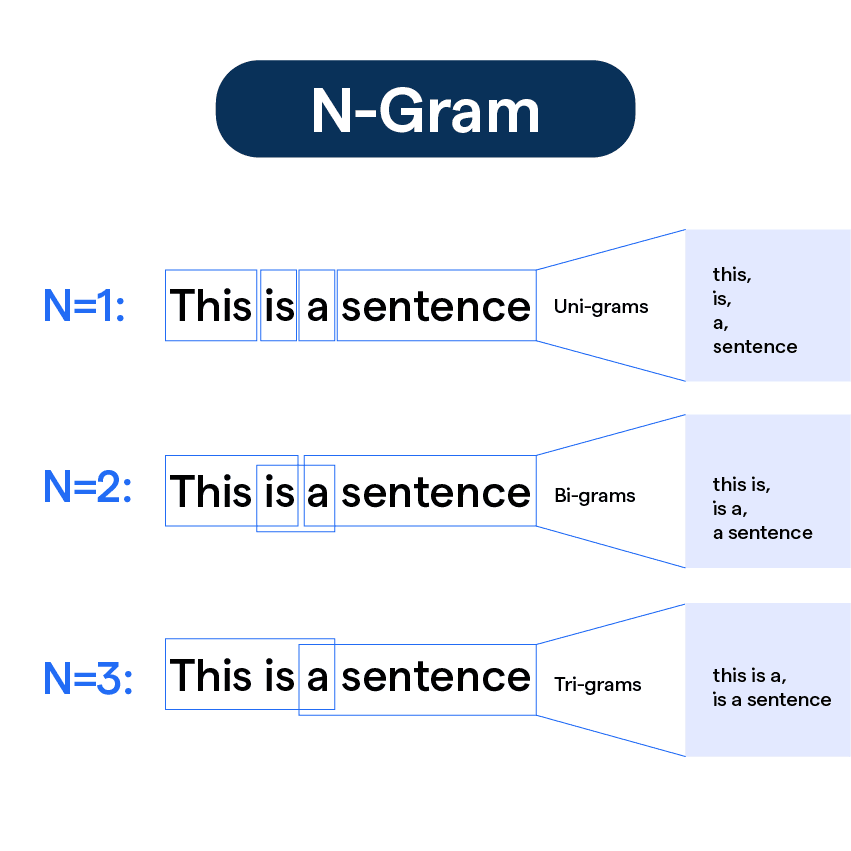

📌 How N-Grams Work:

N-Gram models predict the next word based on the previous N-1 words.

If N=2, it’s a bigram model (predicts next word using 1 previous word).

If N=3, it’s a trigram model (predicts using 2 previous words).

🔹 Example: Predicting the next word with a Trigram Model

Input: "The cat sat on the"

Context the trigram model actually uses: "on the" (only the last 2 words)

Predicted next word: "mat" (the word that most often followed "on the" in the training data)

👩‍💻 Rin: "So it just guesses the most common next word based on past sequences?"

👨‍💻 Obito: "Exactly! But the problem is, N-Grams have no memory beyond the last N-1 words."

👩‍💻 Rin: "So if N=3, it has no clue about anything before the last two words?"

👨‍💻 Obito: "Yep. That’s why N-Gram models struggle with long-term coherence."
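To make the trigram idea concrete, here is a minimal Python sketch (not any library’s implementation): it counts which word follows each pair of words in a tiny, made-up corpus, then predicts by picking the most frequent follower. The corpus and the function names `train_trigram` and `predict_next` are hypothetical, and real n-gram models add smoothing or backoff to handle contexts they never saw.

```python
from collections import Counter, defaultdict

def train_trigram(corpus):
    """Count which word follows each pair of words (a trigram model)."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for w1, w2, w3 in zip(words, words[1:], words[2:]):
            counts[(w1, w2)][w3] += 1
    return counts

def predict_next(counts, text):
    """Predict the most frequent next word using only the last two words."""
    words = text.lower().split()
    context = tuple(words[-2:])  # everything before these two words is ignored
    followers = counts.get(context)
    if not followers:
        return None  # unseen context: real models need smoothing/backoff here
    return followers.most_common(1)[0][0]

# Hypothetical toy corpus, made up for illustration
corpus = [
    "the cat sat on the mat",
    "the dog sat on the mat",
    "the bird sat on the fence",
]
model = train_trigram(corpus)
print(predict_next(model, "The cat sat on the"))
print(predict_next(model, "The bird sat on the"))
```

Look at the second call: in the training data the bird sat on the fence, but the model only sees "on the", so it still picks "mat". That is the short-memory problem in action.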

🔹 Further Reading: Understanding N-Grams in NLP

🔄 1990s–2010: The Rise of Recurrent Neural Networks (RNNs)

👩‍💻 Rin: "Okay, but AI today remembers long conversations. How did we move past N-Grams?"

👨‍💻 Obito: "That’s where Recurrent Neural Networks (RNNs) came in."

📌 How RNNs Work:

✅ Process sentences sequentially (one word at a time)

✅ Remember past words using a hidden state

✅ Used for early chatbots, speech recognition, and translation
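Here is a minimal sketch of that recurrence, assuming a tiny, untrained RNN. Each word (represented by a made-up, one-number embedding) is mixed with the previous hidden state through h_t = tanh(W_xh·x_t + W_hh·h_{t-1} + b). The embeddings, the hidden size of 3, and the random weights are all illustrative assumptions; a real RNN learns its weights and uses vector embeddings.

```python
import math
import random

random.seed(0)  # fixed seed so the toy weights are reproducible
HIDDEN = 3      # hypothetical hidden-state size

# Hypothetical one-number "embeddings", made up for illustration
embed = {"the": 0.1, "cat": 0.9, "sat": -0.4, "on": 0.3}

# Random, untrained weights (illustration only)
W_xh = [random.uniform(-1, 1) for _ in range(HIDDEN)]                           # input -> hidden
W_hh = [[random.uniform(-1, 1) for _ in range(HIDDEN)] for _ in range(HIDDEN)]  # hidden -> hidden
b_h = [0.0] * HIDDEN

def rnn_step(h_prev, x):
    """One RNN step: h_t = tanh(W_xh * x_t + W_hh @ h_{t-1} + b)."""
    return [
        math.tanh(W_xh[i] * x + sum(W_hh[i][j] * h_prev[j] for j in range(HIDDEN)) + b_h[i])
        for i in range(HIDDEN)
    ]

def run_rnn(words):
    h = [0.0] * HIDDEN  # empty memory before the first word
    for word in words:  # strictly one word at a time
        h = rnn_step(h, embed[word])
    return h

h_full = run_rnn("the cat sat on the".split())
print([round(v, 3) for v in h_full])
```

Because the loop feeds `h` back in at every step, the final state depends on every earlier word. It also means step t cannot start until step t-1 has finished.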

🔹 Example: RNN Processing a Sentence

"The cat sat on the" (hidden state carries memory of past words)

👩‍💻 Rin: "Finally! AI that remembers full sentences!"

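👨‍💻 Obito: "Yes, but RNNs have a major flaw: they forget long-term context."

👩‍💻 Rin: "Wait, what? But humans remember context naturally!"

👨‍💻 Obito: "Exactly why plain RNNs weren’t enough. They suffer from the vanishing gradient problem: during training, the error signal is multiplied by a factor at every time step it flows back through, and when those factors are smaller than 1 it shrinks toward zero. Words from far back in a sentence end up having almost no influence on what the network learns."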
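Why do gradients vanish? For a one-number RNN h_t = tanh(w·h_{t-1} + x_t), the chain rule says the gradient flowing from step T back to step 0 is a product of T factors w·(1 - h_t²), where (1 - h_t²) is the derivative of tanh. That derivative is at most 1, so when |w| < 1 every factor is below 1 and the product shrinks exponentially. The sketch below assumes w = 0.8 and a constant input of 0.5, both chosen purely for illustration.

```python
import math

def gradient_through_time(w, inputs):
    """
    Scalar RNN h_t = tanh(w * h_{t-1} + x_t), starting from h_0 = 0.
    Returns dh_T/dh_0 via the chain rule: the product of w * (1 - h_t**2)
    over all steps, where (1 - h_t**2) is the derivative of tanh.
    """
    h, grad = 0.0, 1.0
    for x in inputs:
        h = math.tanh(w * h + x)
        grad *= w * (1.0 - h * h)  # one chain-rule factor per time step
    return grad

W = 0.8  # hypothetical recurrent weight
X = 0.5  # hypothetical constant input
for steps in (1, 5, 10, 20, 50):
    g = gradient_through_time(W, [X] * steps)
    print(f"{steps:>2} steps back: gradient ~ {g:.1e}")
```

When the factors are consistently larger than 1 you get the opposite failure, exploding gradients.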

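🧠 1997–2017: Enter LSTMs & GRUs – Fixing RNNs’ Memory

👩‍💻 Rin: "So how did we fix RNNs?"

👨‍💻 Obito: "With Long Short-Term Memory (LSTM) networks, first proposed in 1997! They add a memory cell plus gates that let the model decide what to remember and what to forget. GRUs (Gated Recurrent Units, introduced in 2014) are a simpler variant with fewer gates."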

📌 How LSTMs Work:

✅ Capture longer-range dependencies than plain RNNs

✅ Use gates to control memory flow

✅ Improved machine translation & chatbots
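Here is a minimal single-cell sketch of the gating idea, assuming the widely used LSTM variant with forget, input, and output gates, and scalar (one-number) weights instead of matrices. The weights are hand-picked, hypothetical values: `keep` sets the forget gate near 1 and the input gate near 0, while `forget` pushes the forget gate near 0, so you can watch a stored value survive or fade over 20 steps.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One step of a scalar LSTM cell; p holds hypothetical hand-picked weights."""
    f = sigmoid(p["wf"] * x + p["uf"] * h_prev + p["bf"])          # forget gate: keep old memory?
    i = sigmoid(p["wi"] * x + p["ui"] * h_prev + p["bi"])          # input gate: write new info?
    c_tilde = math.tanh(p["wc"] * x + p["uc"] * h_prev + p["bc"])  # candidate new content
    o = sigmoid(p["wo"] * x + p["uo"] * h_prev + p["bo"])          # output gate: expose memory?
    c = f * c_prev + i * c_tilde  # additive memory update
    h = o * math.tanh(c)          # hidden state passed to the next step
    return h, c

def cell_after(params, steps=20):
    """Start with 1.0 stored in the cell, feed zeros, return the final cell state."""
    h, c = 0.0, 1.0
    for _ in range(steps):
        h, c = lstm_step(0.0, h, c, params)
    return c

base = dict(wf=0.0, uf=0.0, wi=0.0, ui=0.0, wc=1.0, uc=0.0, bc=0.0, wo=0.0, uo=0.0, bo=0.0)
keep = {**base, "bf": 5.0, "bi": -5.0}     # forget gate ~1, input gate ~0
forget = {**base, "bf": -5.0, "bi": -5.0}  # forget gate ~0

print(f"keep:   {cell_after(keep):.3f}")
print(f"forget: {cell_after(forget):.3e}")
```

The key line is `c = f * c_prev + i * c_tilde`: memory is updated by addition instead of being squashed through a fresh tanh at every step, which is what lets information (and gradients) survive longer.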

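👩‍💻 Rin: "So now AI can remember text from much earlier in a conversation?"

👨‍💻 Obito: "Much earlier than before, yes! But LSTMs still read text one word at a time, so training can’t be parallelized across a sequence. That makes them slow and hard to scale. Enter... Transformers."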

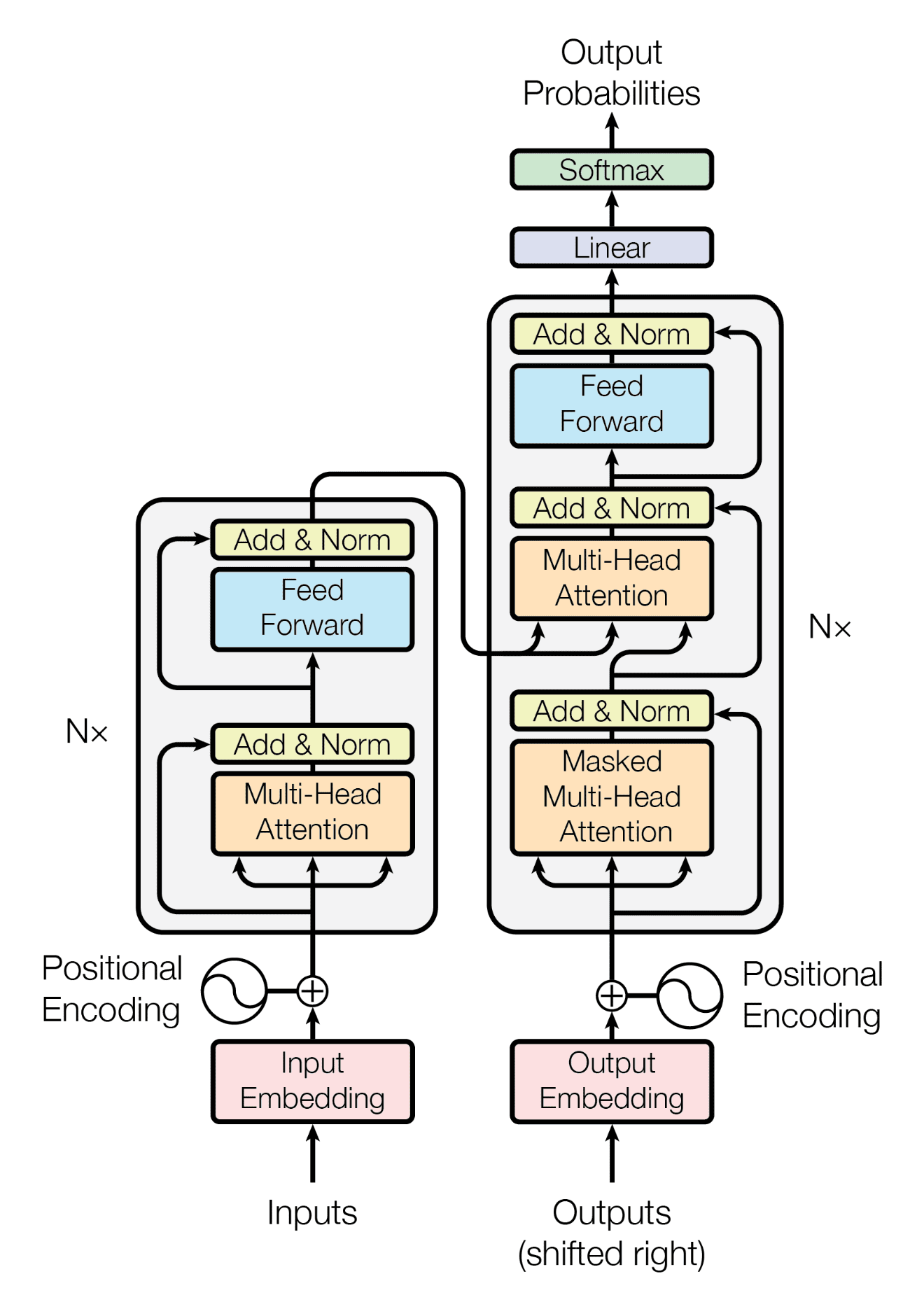

⚡ 2017–Present: Transformers Revolutionize AI

👩‍💻 Rin: "Okay, Obito, this is where it gets exciting. What are Transformers?"

👨‍💻 Obito: "Transformers are the architecture behind LLMs like GPT and BERT. Instead of processing text one word at a time like RNNs, they use self-attention to look at all the words in a sequence at once and weigh how relevant each word is to the others."

📌 Key Innovations of Transformers:

✅ Self-Attention Mechanism → Models can focus on important words dynamically

✅ Parallel Processing → Faster training than RNNs

✅ Scalability → Scales to massive datasets and models with billions of parameters
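Below is a minimal sketch of scaled dot-product self-attention, softmax(QKᵀ/√d)·V, in plain Python. To keep it short it assumes Q = K = V = the raw word vectors; real Transformers first apply learned projections, use multiple attention heads, and in GPT-style models mask out future words. The 2-number word vectors are invented for illustration.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(Q, K, V):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V, one row per word."""
    d = len(K[0])
    outputs, weights = [], []
    for q in Q:  # rows don't depend on each other, so they could run in parallel
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in K]
        w = softmax(scores)  # how strongly this word attends to every word
        weights.append(w)
        # Output = attention-weighted average of the value vectors
        outputs.append([sum(wj * v[col] for wj, v in zip(w, V)) for col in range(len(V[0]))])
    return outputs, weights

# Hypothetical 2-number vectors for three words, made up for illustration
words = ["the", "cat", "sat"]
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

outputs, weights = self_attention(X, X, X)
for word, w in zip(words, weights):
    print(word, [round(x, 2) for x in w])
```

Each word’s row is computed independently of the others, which is why this work can be spread across a whole sequence at once on a GPU, unlike the word-by-word RNN loop.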

👩‍💻 Rin: "So instead of remembering words one by one like RNNs, Transformers see the whole sentence at once?"

👨‍💻 Obito: "Bingo! That’s why they work so well for long documents, translations, and conversations."

🎯 Final Thoughts: How We Got Here

👩‍💻 Rin: "So let me get this straight: AI started with N-Grams, improved with RNNs, got better with LSTMs, and then Transformers changed everything?"

👨‍💻 Obito: "Exactly! And now, models like GPT-4 are pushing the boundaries with billions of parameters."

📌 The Evolution of Language Models:

✅ N-Grams → Fast but short memory

✅ RNNs → Sequential learning but forget long-term context

✅ LSTMs → Better memory but slow

✅ Transformers → Parallel, scalable, and state-of-the-art

👩‍💻 Rin: "And what comes after Transformers?"

👨‍💻 Obito: "That’s a future topic! Before we dig into how Transformers work internally, though, we’ll cover the neural network basics they’re built on."

🔗 What’s Next in the Series?

📌 Next: 🧠 Understanding Neural Networks: The Foundation of LLMs

📌 Previous: What Are Large Language Models? A Beginner’s Guide

🚀 Want More AI Deep Dives?

🚀 Follow BinaryBanter on Substack, Medium | 💻 Learn. Discuss. Banter.